Sustainable Green Energy Optimization for Edge Cloud Computing with Renewable Energy Resources

The rapid growth of edge cloud computing has brought about significant advancements in data processing and communication, yet it poses challenges in terms of energy consumption and environmental sustainability. This research proposes a novel approach to address these challenges by integrating renewable energy resources into edge cloud computing systems. The goal is to optimize the utilization of green energy sources while ensuring efficient operation and minimizing environmental impact.

The proposed framework leverages predictive analytics and optimization algorithms to dynamically allocate computational tasks and manage energy resources in edge cloud environments. By considering factors such as weather conditions, energy availability, and workload demands, the system aims to maximize the utilization of renewable energy sources while meeting performance requirements and minimizing operational costs.

Key components of the framework include intelligent workload scheduling, adaptive resource provisioning, and energy-aware task migration strategies. These techniques enable efficient utilization of renewable energy resources, reduce reliance on conventional power sources, and promote sustainability in edge cloud computing deployments.

To evaluate the effectiveness of the proposed approach, simulations and experiments are conducted using real-world workload traces and energy data. The results demonstrate significant improvements in energy efficiency, cost savings, and environmental sustainability compared to traditional approaches.

Overall, this research contributes to advancing the state-of-the-art in sustainable green energy optimization for edge cloud computing, offering practical solutions to mitigate environmental impact and enhance the long-term viability of modern computing infrastructures.

Aim and Objectives:

Aim:

The aim of this research is to develop a sustainable green energy optimization framework for edge cloud computing by integrating renewable energy resources, thereby reducing environmental impact and enhancing energy efficiency.

Objectives:

- To analyze the energy consumption patterns and workload characteristics in edge cloud computing environments.

- To identify and evaluate renewable energy resources suitable for integration into edge cloud infrastructures.

- To design and develop predictive analytics and optimization algorithms for dynamic energy management and workload scheduling.

- To implement and validate the proposed framework through simulations and real-world experiments.

- To assess the effectiveness of the framework in terms of energy efficiency, cost savings, and environmental sustainability.

- To compare the performance of the proposed approach with existing methods and evaluate its scalability and practical feasibility.

- To provide recommendations for deploying and optimizing sustainable edge cloud computing systems with renewable energy resources.

- To contribute insights and knowledge to the field of green computing and sustainable technology solutions.

Problem Definition:

The increasing demand for edge cloud computing services has led to a rise in energy consumption and carbon emissions, posing significant challenges to environmental sustainability. Traditional data centers and cloud computing infrastructures rely heavily on fossil fuel-based energy sources, contributing to greenhouse gas emissions and environmental degradation. Moreover, the dynamic nature of edge computing workloads exacerbates the energy inefficiency problem, as conventional systems struggle to adapt to fluctuating demands and energy availability.

The problem addressed in this research is the lack of sustainable energy optimization solutions tailored specifically for edge cloud computing environments. Existing approaches often overlook the unique characteristics of edge computing, such as decentralized architecture, resource constraints, and intermittent renewable energy sources. Consequently, there is a pressing need to develop innovative strategies that leverage renewable energy resources effectively while ensuring optimal performance, reliability, and cost-efficiency in edge cloud deployments.

This research aims to tackle these challenges by proposing a comprehensive framework for sustainable green energy optimization in edge cloud computing. By integrating renewable energy sources, such as solar and wind power, into edge computing infrastructures and leveraging predictive analytics and optimization algorithms, the goal is to mitigate the environmental impact, reduce reliance on fossil fuels, and promote energy-efficient operations.

Problem Statements:

- Energy Inefficiency in Edge Cloud Computing: The energy consumption of edge cloud computing infrastructures is often inefficient due to factors such as underutilization of resources, lack of dynamic energy management, and reliance on fossil fuel-based energy sources.

- Environmental Impact: The rapid expansion of edge cloud computing exacerbates environmental concerns, with conventional energy sources contributing to carbon emissions and environmental degradation.

- Renewable Energy Integration Challenges: Integrating renewable energy resources, such as solar and wind power, into edge cloud computing systems faces challenges such as intermittency, variability, and limited scalability, hindering their effective utilization.

- Workload Dynamics and Resource Allocation: The dynamic nature of edge computing workloads complicates resource allocation and energy management, leading to suboptimal performance and increased energy consumption.

- Cost Implications: Energy costs constitute a significant portion of the operational expenses in edge cloud computing, and inefficient energy utilization results in higher operational costs, impacting the economic viability of edge computing deployments.

- Lack of Sustainable Optimization Strategies: Existing optimization strategies for edge cloud computing often prioritize performance and cost over sustainability, neglecting the potential benefits of integrating renewable energy sources and minimizing environmental impact.

- Scalability and Practical Feasibility: Scalability and practical feasibility issues arise when implementing sustainable energy optimization solutions in edge cloud computing, including compatibility with existing infrastructures, hardware constraints, and regulatory considerations.

Importance of Edge Cloud Computing:

Energy Resource Efficiencies

Facebook’s energy-sensitive load balancer “Autoscale” reduces the number of servers that need to be on during low-traffic hours. A related effort under the same name focuses specifically on AI workloads, which run on smartphones in abundance and can shorten battery life through energy drain and cause performance issues.

The Autoscale technology leverages AI to enable energy-efficient inference on smartphones and other edge devices. The intention is for Autoscale to automate deployment decisions and decide whether AI should run on-device, in the cloud, or on a private cloud. Autoscale could result in both cost and efficiency savings.

Other energy resource efficiency programs are also underway in the edge computing space.

Reducing High Bandwidth Energy Consumption

Another area where edge computing can help is in reducing the amount of data traversing the network. This is especially important for high-bandwidth applications like YouTube and Netflix, whose usage has skyrocketed in recent years, and in recent months in particular. Their bandwidth demand is high partly because each stream consists of a large file, and partly because video-on-demand content is distributed in a one-to-one model. High bandwidth consumption is linked to high energy usage and high carbon emissions, since it uses the network more heavily and demands more power.

Edge computing could help optimize energy usage by reducing the amount of data traversing the network. By running applications at the user edge, data can be stored and processed close to the end user and their devices instead of relying on centralized data centers that are often hundreds of miles away. This will lead to lower latency for the end user and could lead to a significant reduction in energy consumption.

Smart Grids and Monitoring Can Lead to Better Management of Energy Consumption

Edge computing can also play a vital role in helping enterprises monitor and manage their energy conservation. Edge compute already supports many smart grid applications, such as grid optimization and demand management.

By tracking energy usage in real time and visualizing it through dashboards, enterprises can better manage their consumption and put preventative measures in place to limit it where possible. This kind of real-time assessment of supply and demand can be particularly useful for managing limited renewable energy resources, such as wind and solar power.
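As a minimal sketch of this kind of supply-and-demand tracking, the Python snippet below compares metered demand against renewable supply interval by interval and reports how much of the demand the renewables covered. The function name `renewable_coverage` and the readings in the usage note are hypothetical and only illustrate the bookkeeping a dashboard might perform.

```python
from typing import List, Tuple


def renewable_coverage(demand_kwh: List[float],
                       supply_kwh: List[float]) -> Tuple[float, float, float]:
    """Return (grid_kwh, surplus_kwh, coverage_ratio) over matching intervals.

    grid_kwh: demand that renewables could not cover in the same interval.
    surplus_kwh: renewable energy left unused in the same interval.
    coverage_ratio: share of total demand met directly by renewables.
    """
    if len(demand_kwh) != len(supply_kwh):
        raise ValueError("demand and supply must cover the same intervals")
    grid = sum(max(d - s, 0.0) for d, s in zip(demand_kwh, supply_kwh))
    surplus = sum(max(s - d, 0.0) for d, s in zip(demand_kwh, supply_kwh))
    total = sum(demand_kwh)
    # With no demand at all, treat coverage as complete.
    coverage = 1.0 if total == 0 else (total - grid) / total
    return grid, surplus, coverage
```

With demand readings of `[4, 6, 5, 3]` kWh and supply readings of `[2, 7, 5, 0]` kWh, the function reports 5 kWh drawn from the grid, 1 kWh of unused surplus, and a coverage ratio of 13/18. No storage is modeled here, so surplus in one interval does not offset a shortfall in another.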

Motivation Behind this Research:

In the past few years, the need for a greener environment has become pressing, and edge cloud computing must grow sustainably. Both the greenhouse gas emissions and the energy consumption of data centers pose a hazard to humankind.

This research investigates energy-aware resource provisioning and allocation algorithms that provision data center resources to client applications in a way that improves the energy efficiency of the data center.

The power consumption of data centers has become a key issue. There is a need to create an efficient edge cloud computing system that utilizes the strengths of the cloud while minimizing its energy footprint, so it is imperative to enhance the efficiency and sustainability of large data centers. One of the most important enabling technologies is virtualization, which abstracts the hardware and system resources away from the operating system. Green cloud computing builds on such techniques to improve data center efficiency and sustainability. As the prevalence of cloud computing continues to rise, so does the need for power-saving mechanisms. Even the most efficiently built data center with the highest utilization rates will only mitigate, rather than eliminate, harmful CO2 emissions. One reason given is that cloud providers are more interested in reducing electricity costs than in reducing carbon emissions.

Need of the Hour Green Energy:

- The global climate crisis requires significant innovations in clean energy deployed at scale globally. Although there has been significant progress and thought leadership across the energy transition, there is still a major gap in the path for achieving net-zero goals. There is a new global energy economy emerging with the promise that successful net zero focused partnerships could create a market for clean technologies.

- The need is to run data centers around the world on carbon-free energy, 24/7, as part of the transition to more sustainable, carbon-free systems.

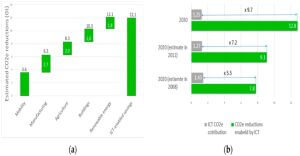

Estimated: (a) contribution of different industry sectors to global carbon-dioxide equivalent (CO2e) reduction by 2030, (b) information and communications technology (ICT) sector CO2e “footprint” contribution and enabled reductions to global CO2e emissions.

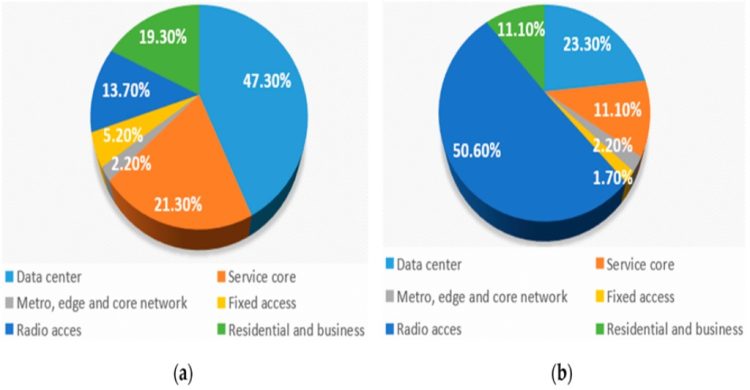

Estimated network energy consumption for main communication sectors in: (a) 2013 and (b) 2025

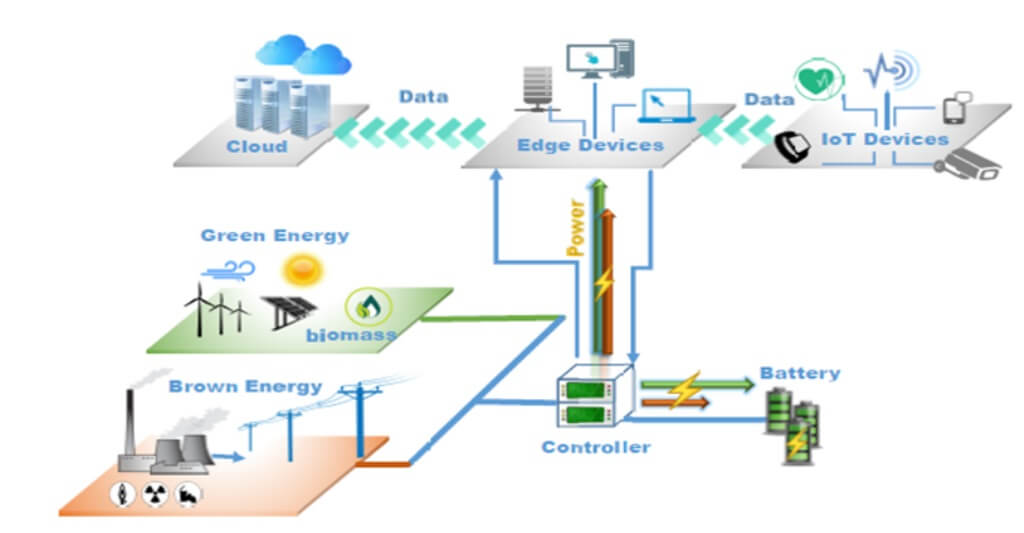

Reference Architecture of Green Edge Cloud Computing System:

Energy Optimization at Edge:

Edge intelligence can help optimize data usage and reduce the amount of data that needs to traverse the network, which in turn reduces energy consumption and carbon emissions. For example, optimizing workloads can significantly reduce the energy consumed by data transport and decrease the need for physical transport (less CO2).

Applications enriched by new edge capabilities can satisfy user needs through a more sustainable use of environmental resources enabling reduction of energy consumption and carbon emissions.

Based on predictions made by edge intelligence algorithms, data and computation can be brought closer to the sites where green energy is available (i.e., optimal use of green energy). This also creates new ways to continuously develop cyber security and data privacy aspects.

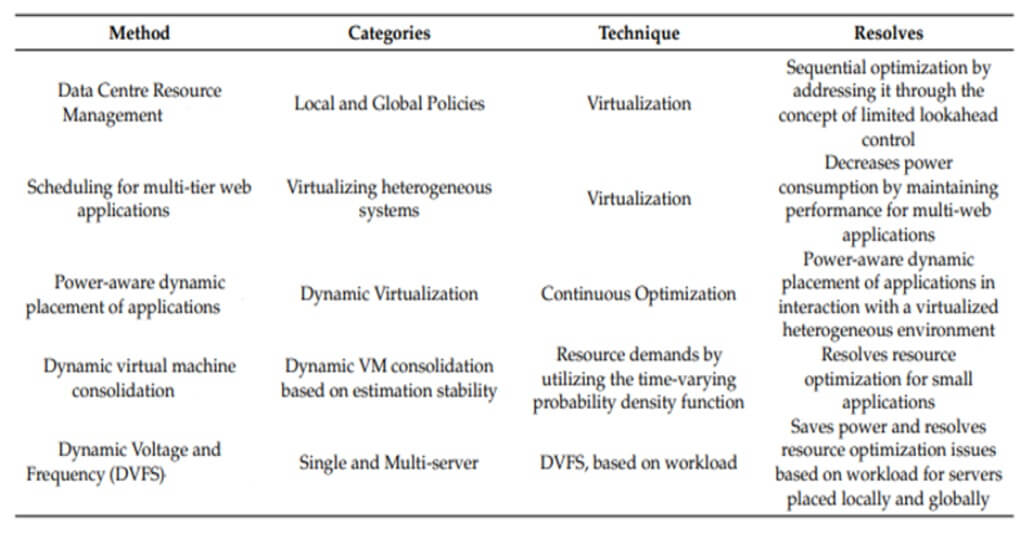

Energy Optimization Methods:

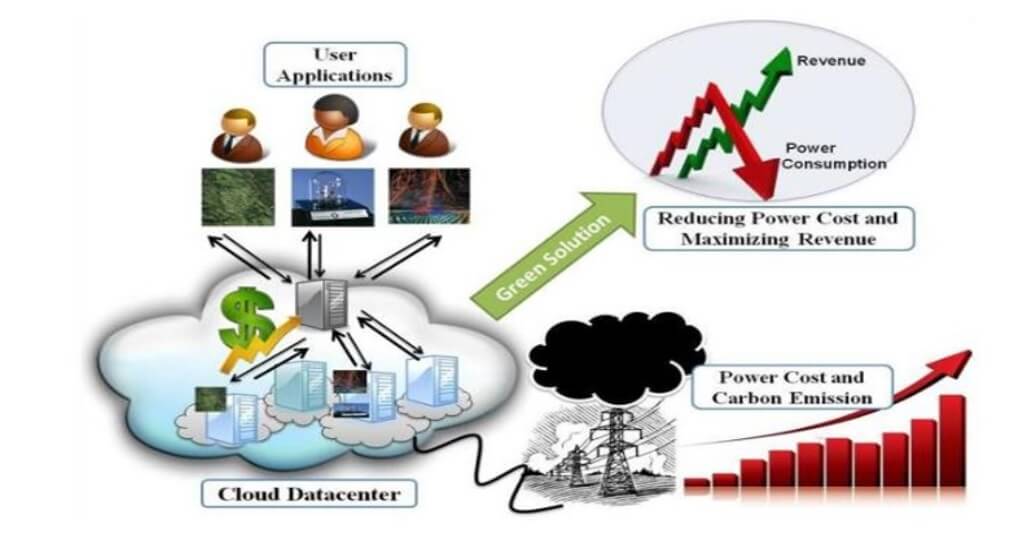

Green Solutions:

Optimization of Green Edge Cloud Computing:

There are 4 pillars of optimization of Green Edge Cloud Computing. They are described below:

- Energy Resources: This consideration comes under cloud providers’ control where cloud infrastructure is powered by renewable energy.

- Energy Efficiency: Tech giants like Microsoft have adopted effective approaches of cooling like building data centers underwater to make cloud infrastructure energy efficient.

- Number and Size of Servers: Organizations can optimize the performance of their applications and reduce the number of servers, which in turn reduces storage and decreases the carbon footprint.

- Reducing the Data Transfer: By optimizing the caching process, reloading only the needed components, and prioritizing mobile-first experience, the data required can be reduced.

Proposed Methodology for Energy Optimization at Data Centers:

Sustainable Edge Cloud Energy Management Framework (SECEM): SECEM is a pioneering framework designed to optimize energy consumption and resource allocation in edge cloud environments by integrating predictive analytics and optimization algorithms. By dynamically allocating computational tasks and managing energy resources, SECEM aims to enhance energy efficiency, reduce environmental impact, and ensure sustainable operation of edge cloud infrastructures.

Framework Components:

1. Predictive Analytics Module:

Data Collection: SECEM collects real-time data on workload patterns, energy availability, and environmental factors using sensors and IoT devices deployed across edge cloud nodes.

Data Processing: Utilizing machine learning algorithms, SECEM analyzes historical data to forecast future workload demands, energy generation from renewable sources, and environmental conditions.
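One simple physical baseline for the renewable generation forecast is to convert forecast irradiance into panel output. The sketch below assumes a fixed panel area and conversion efficiency and ignores temperature, shading, and inverter losses. The function name and the numbers in the usage note are illustrative assumptions, not values from SECEM.

```python
def solar_energy_kwh(irradiance_w_m2: float, area_m2: float,
                     efficiency: float, hours: float = 1.0) -> float:
    """Estimate solar energy (kWh) produced over `hours` at constant irradiance.

    Assumes output power = irradiance * panel area * conversion efficiency.
    """
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must be between 0 and 1")
    if irradiance_w_m2 < 0 or area_m2 < 0 or hours < 0:
        raise ValueError("irradiance, area, and hours must be non-negative")
    power_w = irradiance_w_m2 * area_m2 * efficiency
    return power_w * hours / 1000.0  # convert watt-hours to kilowatt-hours
```

For example, `solar_energy_kwh(800, 10, 0.2)` estimates about 1.6 kWh for one hour at 800 W/m² on 10 m² of panels at 20% efficiency. A learned model could replace or correct this baseline using historical generation data.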

Workload Prediction: SECEM predicts future computational workload patterns based on historical data, enabling proactive resource allocation and energy management.
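The text does not name a specific forecasting model, so the sketch below uses simple exponential smoothing purely as a stand-in for the workload predictor. The function name, the smoothing factor `alpha`, and the sample history are assumptions for illustration.

```python
from typing import Sequence


def exponential_smoothing_forecast(history: Sequence[float],
                                   alpha: float = 0.5) -> float:
    """One-step-ahead workload forecast using simple exponential smoothing.

    A higher alpha weights recent observations more heavily.
    """
    if not history:
        raise ValueError("history must not be empty")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    level = history[0]
    for observed in history[1:]:
        # Blend the newest observation with the running estimate.
        level = alpha * observed + (1.0 - alpha) * level
    return level
```

With a history of `[10, 20, 30]` requests per second and `alpha=0.5`, the forecast for the next interval is 22.5. This method does not capture trend or daily seasonality, which real edge workloads often show, so a production predictor would likely need a richer model.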

2. Optimization Algorithms:

Energy-Aware Task Allocation: SECEM employs optimization algorithms to dynamically allocate computational tasks to edge cloud nodes based on predicted workload and energy availability. Tasks are assigned to nodes with sufficient renewable energy resources to minimize reliance on conventional power sources.
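One way to picture energy-aware allocation is a greedy heuristic that places each task on the node with the most forecast renewable energy left. This is a hedged sketch rather than SECEM's actual optimizer: the `Node` and `Task` types, the field names, and the greedy rule are all assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    renewable_kwh: float  # forecast renewable energy still unallocated


@dataclass
class Task:
    name: str
    energy_kwh: float  # estimated energy needed to run the task


def allocate_tasks(tasks: List[Task], nodes: List[Node]) -> Dict[str, Optional[str]]:
    """Greedily place each task on the node with the most renewable energy left.

    Returns a task-name -> node-name mapping. None means no node had enough
    renewable energy, so the task would fall back to conventional power.
    """
    remaining = {n.name: n.renewable_kwh for n in nodes}
    placement: Dict[str, Optional[str]] = {}
    # Place the most energy-hungry tasks first so they are not starved.
    for task in sorted(tasks, key=lambda t: t.energy_kwh, reverse=True):
        best = max(remaining, key=remaining.get, default=None)
        if best is not None and remaining[best] >= task.energy_kwh:
            remaining[best] -= task.energy_kwh
            placement[task.name] = best
        else:
            placement[task.name] = None
    return placement
```

Given nodes A (5 kWh) and B (3 kWh) and tasks needing 4, 3, and 2 kWh, the heuristic places the first two on A and B and leaves the third without a renewable-powered node. A greedy rule is fast but not optimal; an exact formulation would treat this as a bin-packing style problem and also account for latency and capacity constraints.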

Resource Provisioning: SECEM optimizes resource provisioning by dynamically scaling computing and storage resources according to workload demand and available energy resources. It ensures efficient utilization of hardware resources while maintaining performance requirements.
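The scaling decision can be illustrated with a small calculation: how many servers the predicted load requires, and how many of those the available renewable power can run. All parameter names and the per-server capacity and power figures below are hypothetical, and the sketch assumes identical servers with constant power draw.

```python
import math
from typing import Tuple


def servers_to_activate(predicted_load: float, capacity_per_server: float,
                        power_per_server_w: float, renewable_power_w: float,
                        max_servers: int) -> Tuple[int, int]:
    """Return (active_servers, servers_powered_by_renewables).

    active_servers covers the predicted load, capped at max_servers.
    The second value is how many of those the renewable supply can power.
    """
    if capacity_per_server <= 0 or power_per_server_w <= 0:
        raise ValueError("capacity and power per server must be positive")
    needed = min(max_servers,
                 math.ceil(max(predicted_load, 0.0) / capacity_per_server))
    green = min(needed,
                math.floor(max(renewable_power_w, 0.0) / power_per_server_w))
    return needed, green
```

For a predicted load of 950 requests per second, 200 requests per second per server, 300 W per server, and 1000 W of renewable power, the function returns `(5, 3)`: five servers are needed and three of them can run on renewables. The remaining two would draw on storage or conventional power.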

Task Migration Strategies: SECEM implements energy-aware task migration strategies to balance workload distribution and energy consumption across edge cloud nodes. Tasks may be migrated between nodes to leverage renewable energy availability and reduce energy wastage.
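A migration plan can be sketched as matching nodes whose load exceeds their renewable supply with nodes that have renewable energy to spare. The function below is an illustrative assumption, not the SECEM algorithm. It ignores the energy and latency cost of the migration itself and treats load as freely divisible.

```python
from typing import Dict, List, Tuple


def plan_migrations(load_kwh: Dict[str, float],
                    renewable_kwh: Dict[str, float]) -> List[Tuple[str, str, float]]:
    """Return (source, destination, kwh) moves from deficit to surplus nodes."""
    # Positive balance = spare renewable energy; negative = shortfall.
    balance = {n: renewable_kwh[n] - load_kwh[n] for n in load_kwh}
    surplus = [[n, b] for n, b in balance.items() if b > 0]
    deficit = [[n, -b] for n, b in balance.items() if b < 0]
    # Serve the largest shortfalls from the largest surpluses first.
    surplus.sort(key=lambda item: item[1], reverse=True)
    deficit.sort(key=lambda item: item[1], reverse=True)
    moves: List[Tuple[str, str, float]] = []
    s = d = 0
    while s < len(surplus) and d < len(deficit):
        amount = min(surplus[s][1], deficit[d][1])
        moves.append((deficit[d][0], surplus[s][0], amount))
        surplus[s][1] -= amount
        deficit[d][1] -= amount
        if surplus[s][1] == 0:
            s += 1
        if deficit[d][1] == 0:
            d += 1
    return moves
```

With loads `{"A": 5, "B": 2, "C": 4}` and renewable supply `{"A": 2, "B": 6, "C": 3}`, node B has a 4 kWh surplus, so the plan moves 3 kWh of work from A to B and 1 kWh from C to B. In practice a migration is only worthwhile when the renewable energy gained exceeds the transfer overhead, which this sketch does not model.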

3. Decision-Making Engine:

Optimization Objectives: SECEM’s decision-making engine balances multiple objectives, including energy efficiency, performance optimization, cost reduction, and environmental sustainability.

Dynamic Decision Making: SECEM dynamically adjusts resource allocation and task scheduling decisions in response to changing workload conditions, energy availability, and environmental factors.
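Balancing several objectives is often done with a weighted sum, which is used here as an assumed stand-in since the text does not specify how the engine combines them. In this sketch each candidate plan carries normalized cost metrics in the range 0 to 1 (lower is better), and the metric names, weights, and plan names are hypothetical.

```python
from typing import Dict


def score_plan(metrics: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of normalized cost metrics; a lower score is better."""
    return sum(weights[name] * metrics[name] for name in weights)


def choose_plan(plans: Dict[str, Dict[str, float]],
                weights: Dict[str, float]) -> str:
    """Return the name of the plan with the lowest weighted score."""
    if not plans:
        raise ValueError("at least one candidate plan is required")
    return min(plans, key=lambda name: score_plan(plans[name], weights))
```

As a usage example, consider two plans scored on `energy`, `latency`, `cost`, and `carbon`: a grid-heavy plan with `(0.8, 0.2, 0.6, 0.9)` and a green-shifted plan with `(0.5, 0.4, 0.4, 0.2)`. With equal weights of 0.25 the scores are 0.625 and 0.375, so the green-shifted plan wins. If latency alone is weighted, the grid-heavy plan wins instead. Re-running the selection whenever forecasts change gives the dynamic behavior described above.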

4. Integration with Renewable Energy Sources:

SECEM integrates with renewable energy sources, such as solar panels and wind turbines, to leverage green energy generation for powering edge cloud infrastructures.

Energy Storage Integration: SECEM incorporates energy storage systems, such as batteries or capacitors, to store surplus renewable energy for use during periods of low energy availability.
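The role of storage can be illustrated with a simple state-of-charge simulation: surplus generation charges the battery, and shortfalls discharge it before any grid power is drawn. The sketch assumes an ideal battery with no conversion losses or rate limits, and the function name and sample values are hypothetical.

```python
from typing import List, Sequence, Tuple


def simulate_battery(generation_kwh: Sequence[float],
                     demand_kwh: Sequence[float],
                     capacity_kwh: float,
                     initial_kwh: float = 0.0) -> Tuple[List[float], float]:
    """Return (state of charge after each interval, total grid energy drawn)."""
    if len(generation_kwh) != len(demand_kwh):
        raise ValueError("generation and demand must cover the same intervals")
    charge = min(max(initial_kwh, 0.0), capacity_kwh)
    grid_kwh = 0.0
    state_of_charge: List[float] = []
    for generated, demanded in zip(generation_kwh, demand_kwh):
        net = generated - demanded
        if net >= 0:
            # Store the surplus; anything beyond capacity is curtailed.
            charge = min(capacity_kwh, charge + net)
        else:
            shortfall = -net
            discharge = min(charge, shortfall)
            charge -= discharge
            # Whatever the battery cannot supply comes from the grid.
            grid_kwh += shortfall - discharge
        state_of_charge.append(charge)
    return state_of_charge, grid_kwh
```

With generation `[5, 6, 1, 0]` kWh, demand `[3, 3, 4, 4]` kWh, and a 4 kWh battery that starts empty, the state of charge evolves as `[2, 4, 1, 0]` and 3 kWh is drawn from the grid. Without the battery the same scenario would draw 7 kWh from the grid.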

Benefits:

Enhanced Energy Efficiency: SECEM optimizes energy consumption by leveraging predictive analytics and optimization algorithms to dynamically allocate computational tasks and manage energy resources.

Reduced Environmental Impact: By integrating renewable energy sources and minimizing reliance on fossil fuels, SECEM reduces carbon emissions and promotes environmental sustainability.

Cost Savings: SECEM optimizes resource allocation and energy management, resulting in reduced operational costs for edge cloud deployments.

Scalability and Flexibility: SECEM’s modular architecture enables seamless integration with existing edge cloud infrastructures and accommodates future scalability requirements.

SECEM represents a significant advancement in sustainable energy management for edge cloud computing, offering a holistic approach to optimize energy consumption, reduce environmental impact, and ensure the long-term sustainability of edge computing infrastructures.

Conclusion:

Sustainable Edge Cloud Energy Management Framework (SECEM) represents a significant advancement in addressing the challenges of energy consumption and environmental sustainability in edge cloud computing. By integrating predictive analytics and optimization algorithms, SECEM offers a comprehensive approach to dynamically allocate computational tasks and manage energy resources, ultimately enhancing energy efficiency and reducing environmental impact.

Throughout this framework, several key components work in synergy to achieve sustainable energy management:

Data Collection and Predictive Analytics: SECEM collects real-time data on workload patterns, energy availability, and environmental factors, and employs predictive analytics to forecast future demands. This enables proactive decision-making and efficient resource allocation.

Optimization Algorithms: SECEM utilizes optimization algorithms to dynamically allocate tasks, provision resources, and migrate workloads based on predicted workload and energy availability. This ensures optimal performance while minimizing energy consumption and costs.

Decision-Making Engine: The decision-making engine of SECEM balances multiple objectives, including energy efficiency, performance optimization, cost reduction, and environmental sustainability. It continuously adapts to changing conditions to optimize resource utilization and energy consumption.

Integration with Renewable Energy Sources: SECEM integrates with renewable energy sources such as solar and wind power, leveraging green energy generation to power edge cloud infrastructures. Additionally, energy storage systems are employed to store surplus renewable energy for use during periods of low energy availability.

By implementing SECEM, organizations can realize several benefits:

Enhanced Energy Efficiency: SECEM optimizes energy consumption and reduces reliance on fossil fuels, resulting in improved energy efficiency and reduced operational costs.

Environmental Sustainability: SECEM reduces carbon emissions and promotes environmental sustainability by integrating renewable energy sources and minimizing environmental impact.

Cost Savings: SECEM optimizes resource allocation and energy management, leading to cost savings in edge cloud computing deployments.

Scalability and Flexibility: SECEM’s modular architecture allows for seamless integration with existing infrastructures and accommodates scalability requirements.

SECEM offers a holistic and effective solution to the challenges of sustainable energy management in edge cloud computing. By leveraging predictive analytics, optimization algorithms, and renewable energy sources, SECEM paves the way for a greener, more efficient, and sustainable future for edge computing infrastructures.

About the Author:

Mr. Soumya Ranjan Jena,

Assistant Professor, School of Computing and Artificial Intelligence

NIMS University, Jaipur